This Academic Life

12.29.2004

Judging and grading

Taking a quick break from grading -- but actually, not so much of a break…and, as it turned out, not so quick.

This article from the Washington Post (free subscription required) provides yet another example of perhaps my least-favorite misuse of a philosophical term in popular discourse (right up there with the conflation of "deconstruct" and "analyze"):

> [Michelle] Kwan has yet to face the sport's new computer-oriented judging system in which a panel of judges grades each element of a skater's program as it unfolds. The system made its debut more than a year ago in the aftermath of a judging scandal at the 2002 Salt Lake Olympics … It is aimed at bringing *more objectivity* to a sport once considered rife with corruption and cheating. … Kwan said final preparations for the Jan. 11-16 U.S. figure skating championships in Portland, Ore., her only tuneup before the world championships, have been anything but relaxed. The U.S. championships will be contested under the old scoring system, a *far more subjective* one in which judges rank skaters only in two broad categories on a 6.0 scale.

I have italicized the key terms. What bothers me here -- not a surprise, I'm sure -- is the somewhat bizarre notion that a judging system based on point ratings for individual performance components is somehow more "objective" than one based on two broad categories. This is a nonsensical claim, inasmuch as the judges are still evaluating the skater as she or he skates, and as such the element of personal discretion is in no way eliminated.

To make a system of evaluation "objective," all personal discretion would have to be removed, so that "the facts" alone would determine how a performance was to be scored. This follows quite simply from the very definition of "objectivity," which means -- if it means anything at all -- that the truth of a statement derives not from the personal whims and impressions of an observer (which would be "subjectivity"), but from some inherent characteristics of the object under observation. To be more precise, this is what we might call classical objectivity, where "classical" means a number of things (pre-quantum physics, pre-poststructuralism, pre-interpretivism). And it is ordinarily opposed to "subjectivity," i.e. an epistemological stance in which the truth of a statement derives from the knowing subject's personal and potentially idiosyncratic habits of thought.

So let's look at this new judging system for figure skating a bit, courtesy of the International Skating Union's official website:

> 2) Technical Score
>
> When a skater/couple performs, each element of their program is assigned a base value. Base values of all recognised elements are published annually by the ISU. During the program, Judges will evaluate each element within a range of +3 to -3. This evaluation will either add to or deduct from the base value of the element. … When a skater executes an element, the Technical Specialist, monitored by the Technical Controller, will identify the element, and its respective point value will be listed on each Judge’s screen. … The Judge then grades the quality of the element within the range of +3 to -3. The sum of the base value added to the trimmed mean of the Grade of Execution (GOE) of each performed element will form the Total Element Score.

Pardon me for being obtuse, but where's the "objectivity" here? What I see is a set of criteria that individual judges can apply using their (presumably expert) discretion. And this means that a skater's performance rating depends on how she or he is judged by the experts to have executed particular elements.
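
The scoring rule in the quoted passage can be sketched as a short calculation. This is a minimal sketch under stated assumptions, not the ISU's implementation: the trimming rule (drop the single highest and lowest grade), the treatment of each GOE grade as its face value in points, and the base values in the example are all hypothetical, since the quoted text does not specify them. What it shows is that only the aggregation is mechanical; every number in a judge's list of grades is still a discretionary call.

```python
from statistics import mean


def trimmed_mean(grades: list[float]) -> float:
    """Average the grades after dropping the single highest and lowest.

    Assumption: one grade is trimmed from each end; the quoted text
    says only "trimmed mean".
    """
    if len(grades) < 3:
        raise ValueError("need at least three grades to trim")
    kept = sorted(grades)[1:-1]
    return mean(kept)


def total_element_score(elements: list[tuple[float, list[int]]]) -> float:
    """Sum base value plus trimmed-mean GOE over every performed element.

    Each element is (base_value, grades), where grades are the judges'
    GOE marks from -3 to +3. Assumption: a GOE mark counts at face value
    in points.
    """
    total = 0.0
    for base_value, grades in elements:
        if any(g < -3 or g > 3 for g in grades):
            raise ValueError("GOE grades must lie between -3 and +3")
        total += base_value + trimmed_mean(grades)
    return total


# Hypothetical program: two elements, five judges each.
program = [
    (7.5, [1, 2, 1, 0, 1]),   # trimmed grades [1, 1, 1] -> +1
    (3.0, [0, -1, 0, 0, 1]),  # trimmed grades [0, 0, 0] -> 0
]
print(total_element_score(program))  # 11.5
```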

Well, how about in the portion of the score that replaces the old "artistry" rating -- the place where Michelle Kwan traditionally cleaned up and thus defeated skaters whose technical competence was arguably superior?

> 3) Program Components
>
> In addition to the technical score, the Judges will award points on a scale from 0 to 10 with increments of .25 for the five Program Components to grade the overall presentation of the performance. These Program Components are skating skills, transitions, performance/execution, choreography and interpretation. Several factors, as detailed below, are to be taken into account when the Judges consider each component.

Hmm. Still no "objectivity" in sight. And when we start looking at the specific criteria that judges are supposed to take into account when issuing a score for a program component, the issue becomes even clearer. Let me just take two Program Components to illustrate the point:

> Skating Skills include:
>
> - Overall skating quality
> - Multi-directional skating
> - Speed and power
> - Cleanness and sureness of edges
> - Glide and flow
> - Balance in ability of partners (pair skating and ice dancing)
> - Unison (pair skating)
> - Depth and quality of edges and ice coverage (ice dancing)
> - One foot skating (ice dancing)
>
> Choreography includes:
>
> - Harmonious composition of the program
> - Creativity and originality
> - Conformity of elements, steps and movements to the music
> - Originality, difficulty and variety of program pattern
> - Distribution of highlights
> - Utilization of space and ice surface
> - Unison (pair skating)

All it looks like the ISU has done is to spell out the elements that were previously wrapped up to form "artistry" as an omnibus rating. While this is undoubtedly an improvement, inasmuch as it gives a skater a better shot at seeing what is desired from the judges instead of remaining in the dark and simply receiving scores more or less at random, it has zippo implications for the "objectivity" of the score.
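
The program-component side can be sketched the same way. In this minimal sketch, the five component names and the 0 to 10 scale in .25 increments come from the quoted ISU text; averaging each component with a plain mean across judges is an assumption for illustration, since the quoted passage does not say how the judges' marks are combined. Note that the listed criteria never enter the calculation: they guide the judge, but what the system receives is a single mark per component.

```python
COMPONENTS = (
    "skating skills",
    "transitions",
    "performance/execution",
    "choreography",
    "interpretation",
)


def is_valid_mark(mark: float) -> bool:
    """A mark must lie between 0 and 10 and fall on a .25 step."""
    return 0 <= mark <= 10 and float(mark * 4).is_integer()


def component_averages(panel: list[dict[str, float]]) -> dict[str, float]:
    """Average each component across the judges' marks.

    Assumption: a plain mean; the quoted text does not specify the rule.
    """
    averages = {}
    for component in COMPONENTS:
        marks = [judge[component] for judge in panel]
        if not all(is_valid_mark(m) for m in marks):
            raise ValueError(f"invalid mark for {component}")
        averages[component] = sum(marks) / len(marks)
    return averages


# Hypothetical panel of two judges.
panel = [
    {c: 7.0 for c in COMPONENTS},
    {c: 7.5 for c in COMPONENTS},
]
print(component_averages(panel)["choreography"])  # 7.25
```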

Precisely the same thing can be said of "grading rubrics," a craze presently sweeping its way through academia. I'm all for spelling out course requirements and for telling students that when I grade an essay I am looking for a clear thesis, argumentative support for that thesis, an effort to refute counter-arguments, a judicious use of examples when required, etc. But I am under no illusions that this makes my grading more "objective." More precise, yes. More defensible, in that I can point to specific components where the student's performance was lacking, yes. Hence, more transparent. But in the end I, like the figure-skating judges, am still doing the same old thing: making expert judgments about how well a particular performance was executed.

Is there a way to eliminate this? It'd sure make grading go faster … what about giving solely multiple-choice exams? That would be the equivalent of replacing all baseball umpires with the QuesTec automated system for evaluating balls and strikes, I think. In so doing, it would convert a "ball" and a "strike" into purely positional claims: a ball went wide of the plate or was too high or too low, a strike managed to cross the plate at the proper height. And it would make the pitching of balls and strikes into a very mechanical affair indeed, one from which virtually every element of human discretion and judgment had been removed (or, at any rate, formalized and institutionalized into a locally stable structure of rules that restricted agency much more so than presently exists in baseball). Ditto for a multiple-choice test, which would basically eliminate the elements of individual discretion and judgment from an evaluation of a student's performance, and instead simply record a measurement of how many of the pencil-marks attributed to the movement of her or his hand ended up in the proper space on the page.

But would such a system be "objective"? Would it generate "objective" measurements of performance? I don't think so. While such a system would go well beyond the channelling of discretion exemplified in the ISU judging system or in the grading rubric on any of my syllabi, and would essentially eliminate the need for a human judge to make a determination, it would in no way eliminate discretion and judgment per se. Instead, it would hard-wire a certain set of standards into the apparatus of evaluation, and thus take the immediate need to interpret results out of the hands of the observers present at the time. But it would in no way eliminate the observer-dependence of the results thus obtained, even though the "observer" in question would now be a machine -- a machine that was implementing standards generated by ordinary processes of social transaction.
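
A minimal sketch of the kind of hard-wiring described above, and not a description of how QuesTec actually worked: a pitch is called purely by where it crossed the plate, and an exam is scored purely by matching marks against a key. Apart from the plate's 17-inch width, the zone bounds are hypothetical, as are the function names. What the sketch makes concrete is that the standards still have to come from somewhere: change the zone's `top` or the answer key, and the same "facts" get a different verdict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StrikeZone:
    """Zone bounds in inches. Hypothetical values chosen by people, not
    discovered in the pitch; the real zone also varies with the batter."""
    half_width: float = 8.5  # home plate is 17 inches wide
    bottom: float = 18.0
    top: float = 42.0


def call_pitch(x: float, height: float, zone: StrikeZone = StrikeZone()) -> str:
    """Call a pitch from its position alone (ignoring the ball's own size):
    x is the horizontal offset from the middle of the plate, height is
    measured from the ground."""
    in_zone = abs(x) <= zone.half_width and zone.bottom <= height <= zone.top
    return "strike" if in_zone else "ball"


def score_exam(responses: list[str], key: list[str]) -> int:
    """Count how many marks landed in the space the key designates."""
    if len(responses) != len(key):
        raise ValueError("responses and key must be the same length")
    return sum(r == k for r, k in zip(responses, key))


print(call_pitch(2.0, 30.0))                        # strike
print(call_pitch(2.0, 30.0, StrikeZone(top=28.0)))  # ball: same pitch, new standard
print(score_exam(["a", "c", "b"], ["a", "b", "b"]))  # 2
```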

To equate a procedure for taking evaluation out of the hands of particular individuals -- or even out of the hands of any particular individual -- with "objectivity" in the classical sense is a peculiar bit of philosophical sleight-of-hand that simply reinforces the popular notion that there are only two options for a knowledge-claim: either the claim is "objective," meaning true because it corresponds to some innate dispositional characteristic of the universe, or "subjective," meaning true because someone arbitrarily declared that it was true. The sleight of hand here involves the notion of a "dispositional essence," whether this involves a student's "intelligence" or a pitcher's "talent" or a skater's "skill"; if we grant that such occult essences are the targets of our evaluation techniques, then it follows that if we all agree on a standard and then step out of the way, the dispositional essence of the student/pitcher/skater will shine through. Voila, "objectivity" -- in the classical, dualist sense.

[This is how John Searle manages to sustain the argument that one can make epistemically objective statements about ontologically subjective -- i.e. socially constructed -- phenomena: as long as individual discretion is minimized or eliminated, the only other option is "objectivity."]

But do we need to make such an assumption -- and is it even helpful to do so? I am not convinced that it is. After all, figure skating and baseball, as well as final exams, are clearly social products, and in this sense to use a word like "objectivity" in its classical sense when referring to any component of them seems a bit misplaced. Social practices that have more or less firm sets of rules that constitute and govern them generate, not "objective" evaluations, but intersubjective ones; any consensuses that they generate are at least as much a product of interpretive activities between and among the participants as they are a product of anything happening "out there in the world" (wherever that might be). Any stability that we perceive in baseball or figure-skating or in a student's performance is a result of our ongoing interpretive activities, our transactions with the world, and cannot be definitively attributed to "the world" itself (whatever that might be).

This applies equally to evaluation situations involving a lot of individual discretion (old-style judging of figure skating, and most baseball umpiring situations), more structured and transparent forms of discretion (new-style judging, grading rubrics), and virtually no individual discretion (QuesTec, multiple-choice exams). None of these generate "objective" results. A student's grades, like a figure skater's scores or a baseball player's stats, provide a record of her or his performance in specific situations. No occult essences needed.

Of course, none of this means that Michelle Kwan isn't a terrific skater, or that my best students aren't fantastically intelligent and capable scholars. Of course they are -- that's how we define the terms. A .300 hitter hits .300 over the long term, and we know this because they, well, hit .300 over the long term. This doesn't explain anything; "being a .300 hitter" is as little an occult essence as "being an A student" is. ("She got an A because she's an A student" is an empty tautology.) But it does provide information that we can use to classify the person and evaluate her or his performance, and perhaps spur them to try to do better in the future. And isn't this what grading and judging are for?

[Posted with ecto]

12.27.2004

"Vacation"

[slightly modified from dictionary.com]

n.

- A period of time devoted to pleasure, rest, or relaxation, especially one with pay granted to an employee.

- A holiday.

- A fixed period of holidays, especially one during which a school, court, or business suspends activities.

Approximate schedule for this week, the first week of "vacation":

27 December

am: work on revisions of co-authored article for resubmission.

pm: grade at least six final exams from grad class, and finalize semester grades for those students.

28 December

am: grade at least eight final exams from grad class, and finalize semester grades for those students.

pm: work on reading through immense stack of material that needs to be discussed in opening and closing chapters of book manuscript.

29 December

am: grade remaining (at least eight) final exams from grad class, and finalize semester grades for those students. Submit final grades for that course.

pm: continue to work on reading through immense stack of material that needs to be discussed in opening and closing chapters of book manuscript.

30 December

am: grade at least six final exams from undergrad class, and finalize semester grades for those students.

pm: continue to work on reading through immense stack of material that needs to be discussed in opening and closing chapters of book manuscript.

31 December

am: grade at least six final exams from undergrad class, and finalize semester grades for those students.

pm: maybe read a bit; spend time with family for New Year's Eve.

1 January

clean office; work on throwing out excess crap in house, only some of which is directly due to Christmas gifts received; spend some family time with wife and kids.

2 January

am: church.

pm: grade remaining final exams from undergrad class, and finalize semester grades for those students. Submit final grades for that course. Also take care of any other lingering grading (independent studies, internship papers, etc.) and submit those grades. Finally be finished with semester two-plus weeks after it ends.

The next week before classes start up again needs to be spent working on the first and last chapters of my book, revising the rest of the ms. to make it conform to those chapters and their slight reframing of the argument to accord with what the press' board of directors wanted me to do, finalizing syllabi for Spring courses, and doing my bit of another co-authored piece that is also due mid-January -- the same time as the final book ms. is due.

I think that I would like to have "a period of time devoted to pleasure, rest, or relaxation." [Of course, in my case -- as for many academics, I'd venture -- such a period would involve thinking and reading in directions that I wanted to instead of in directions that I had to or was obligated to.] Maybe after I get tenure? Please?

[Posted with ecto]

12.23.2004

Extremes

Has anyone else noticed that the papers/essays that take the longest to grade, and the students whose semester performance it takes the longest to evaluate, are the weakest ones? Maybe this is only true for me. The worse a paper/essay is, or the more marginal a student's performance, the more comments I want to make and the more guidance I want to give them.

There's a slight inverse effect too, in that really good papers/essays seem to take me longer to grade as well. Those students generally present no problems for their overall semester performance, though, so that's a bit of balance: more time for individual assignments, less time for the overall evaluation.

If the whole class were doing decently but neither extremely well nor extremely poorly, maybe this would all go faster.

[Posted with ecto]

Insanity

This may not come as a major revelation to anyone else, but it did come as a major revelation to me: the reason that I feel like I am drowning in grading is not because I am slow or inefficient or otherwise deficient, but because I have entirely too much grading to do in entirely too short a span of time. Consider the following observations:

- this semester I have three classes; their enrollments are 24, 22, and 18, for a total of 64 students. This does not include a number of independent studies, internships which I am supervising, theses which I am advising, etc.

- my university does not permit TAs, so there's no one else to help out with the grading.

- the kind of courses that I traditionally teach do not lend themselves to blue-book, in-class final exams, so all of these courses had final take-home essays or research papers.

- in addition, discussion-intensive classes, which two of my courses this semester were, take considerably more time to grade come the end of the semester, since evaluating a student's performance in class discussion is rather more complicated than simply inserting numbers into a spreadsheet and seeing what pops out. [Not that that kind of end-of-semester grading is particularly easy or self-evident either; my point is only that it takes less time per student than the kind of thing that I am presently wading through.]

- it takes me between half an hour and 45 minutes to wrap up a student's semester, which means grading their final exam/paper and determining their semester grade.

- most of the exams came in on Monday the 20th.
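
The figures in the list above are enough for a back-of-the-envelope total. A quick sketch of that arithmetic (the function name is just for illustration), counting only the three classes and leaving out the independent studies, internships, and theses:

```python
def grading_hours(enrollments: list[int], minutes_per_student: float) -> float:
    """Hours needed to wrap up every student's semester at a fixed rate."""
    return sum(enrollments) * minutes_per_student / 60


enrollments = [24, 22, 18]  # the three classes this semester
low = grading_hours(enrollments, 30)
high = grading_hours(enrollments, 45)
print(f"{sum(enrollments)} students: {low:.0f} to {high:.0f} hours")
# 64 students: 32 to 48 hours
```

That is four to six full eight-hour days of grading, with most of the material arriving on a single Monday.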

Yesterday I worked pretty much all day at a steady clip, and got through 18 research papers. That's about nine hours of work, interspersed with a couple of brief "sanity breaks." Today I need to finish the last four papers for that course (about 2 hours; planning to get that done before lunch); then I have to run out to my campus office to correct a shipping error of Santa's (grumble grumble wife's present went to the wrong place grumble), and run a couple of errands on the way; then I'll stay in for a few hours and grade -- and I also have to triage my e-mail, which has gone basically unanswered for three days. So I'll get some grading done this afternoon, but not a lot. Say three hours, or six students from the 21-person class.

This leaves me with fifteen students from that class (say 8 hours), eighteen students from the other class (say 10-12 hours, since that's a more intensive class where I have to evaluate megabytes of simulation chat transcripts and blog entries in addition to final essays), and a few lingering proposals and independent study papers. Make it an even 24 hours from tonight -- but oh yeah, can't really do it on Friday, or Saturday, or Sunday, because of Christmas festivities. It's sad when Christmas starts looking like an obstacle, isn't it?

So I'll have to finish up over the semester break. Oh yeah, and I have to finalize my book ms. by the middle of January, as well as do my part on a co-authored book chapter, revise an article for resubmission, deal with administrative stuff that lingers from a study-abroad program I ran this summer, handle some stuff related to a professional association that I have a leadership position in…

Like I said, I am beginning to realize (slowly, slowly) that the problem may not be my inefficiency, but more my level of overcommitment. Got to take steps to deal with that somehow…when I find the time to think about how to accomplish that…

[Posted with ecto]

12.20.2004

politics fractal

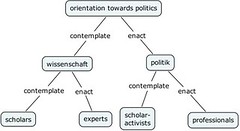

Thanks to the magic of flickr.com and CMap tools, here's a graphical version of the dichotomy I was referring to in an earlier post concerning "orientations towards politics." This graphic makes the argument much easier to understand, I think, than the Punnett-square-esque table I had used previously.

Note that this diagram is also a static representation of an ongoing series of local debates and discussions; in theory, the same basic dichotomy (and calling this a binary dichotomy is of course an idealization; the whole diagram is ideal-typical) replicates again and again within each of the terminal nodes of the present diagram. What is fascinating to me (and to Andrew Abbott, whose analysis this is derived from) is how the same central controversy replicates over and over, and that's what I am trying to capture in this picture. One basic controversy, one commonplace on which we have all implicitly agreed to disagree; a myriad of positions to adopt with respect to it.

Of course, there are other fractals useful for making sense out of these debates too. More on those when I get this &#!*^%$ grading done and can blog about them, complete with better graphics…

[Posted with ecto]

12.09.2004

A Modest Memo

TO: unreflective, unreflexive neopositivists of the social-scientific world

FROM: 21stCWeber

RE: your insulting, dismissive, and coercive use of the word "empirical"

It has come to my attention that for some time now y'all have been using the word "empirical" in very unusual and baffling ways. You say things like "can you prove that empirically?" when critiquing anthropologists and historians, and things like "you don't do empirical work" when referring to scholars who do discourse analysis, textual interpretation, and the like. You even say things like "now there's an example of what empirical work can get you!" when someone presents a large-n quantitative study, but make no such comment when someone presents their detailed interpretation of a series of historical documents or their richly detailed discussion of the (causal) impact of public uses of language.

News flash: "empirical" does not mean either "statistical" or "quantitative." Empirical, according to my trusty OED, means "pertaining to, or derived from, experience," although it also carries connotations of medical quackery (e.g., administering medical treatment without a firm scientific and theoretical foundation) and an emphasis on observation as a source of knowledge. Indeed, according to the OED the word first arose in the context of a debate about whether experiments were a valid source of knowledge -- whether one could start with observation and proceed to develop knowledge on that basis. From there the word mutates slightly so as to encompass a general orientation to the world, retaining an opposition to "theory" but also undergoing several modifications in the course of fractalized disciplinary debates about the status of knowledge.

The point is that "empirical" never meant "statistical" or "quantitative" unless you were a statistician or quantitative analyst, and even then I think it meant something else. Statisticians have empirical data, but this is far from sufficient to define their endeavor; what makes a project "statistical" is how one organizes that data and what one does with it. Rather than serving as a descriptive term, "empirical" used by a statistician as a critique of non-statistical work is just an insult, and only makes sense in the context of an overarching consensus that statistical modes of reasoning are the best guarantor of Truth.

This might -- and I stress might -- have been a sustainable position in the social sciences in the 1950s, and even then only if one ignored the work in the philosophy of science that raised doubts about precisely what the mathematical manipulation of quantitative data could achieve. But that people continue to reproduce this myth today baffles me; did y'all simply miss the last twenty or thirty years of very public debate about this?

And come on. Seriously. Are you really prepared to say that someone who goes out into the field and lives with a group of people for an extended period of time, learns about their cultural set of meanings, and then reports back in the form of an ethnographic account has no empirics? What about a diplomatic historian who uses, say, the complete record of negotiations carried out between the Great Powers during the eighteenth and nineteenth centuries as the basis from which to develop their reconstruction of events and trends? Or the discourse analyst who sifts through years and years of Congressional testimony and parliamentary debates in order to precisely track the deployment of particular rhetorical commonplaces and themes?

Sheesh. "You don't really do empirical work" my ass. Let me tell you something: "I ran regressions on a data set" is probably less empirical than scholarship that is based on fieldwork and detailed documentary analysis. Coding data is empirical work, but in that sense it is no different from participant-observation or the disclosure of central rhetorical themes or network ties. Everything that uses data is "empirical." Social science is "empirical" by definition, inasmuch as it is constitutively about making claims about the world that are sustainable with evidence of some kind. That means statistical analysis, sure, but it also means interpretive and relational modes of inquiry.

[Note that there can be better and worse empirical work in all of these camps. But that's a secondary issue; just because there's some really bad interpretive work out there it does not follow that interpretive work is somehow not "empirical."]

I suggest that all of you go back and read Weber's essay on "objectivity," and then get back to me about what is and is not "empirical."

[Posted with ecto]

12.07.2004

Storm Clouds

Sometimes you can simply sense when a storm is brewing. Something about how the air smells, or the way that the light comes through the clouds…something subtle, but tangible. A sign that a certain amount of chaos is about to erupt. And if it's a bad storm, the possibility emerges that things will be swept away in the violence.

This semester has been a rough one for the Ph.D. program, as tensions and divisions that had been suppressed and unacknowledged have begun to manifest themselves. Whether in dissertation proposal defenses, or in the seminar in which advanced students present their works in progress, or in general conversations in the hallways, people have begun to acknowledge that there are serious divisions among the faculty about what makes for good social science methodology. After witnessing several painful sessions where students were critiqued on neopositivist grounds and were unable to defend themselves, I sent off a carefully worded message to certain key parties, setting in motion a chain of events that, suffice it to say, looks an awful lot like the rumbling of thunderheads. Maybe we're finally going to have a frank discussion about this.

I admit to some trepidation, on several grounds:

- I don't have tenure yet. Big concern -- what if this goes horribly wrong?

- I have seen departments ripped apart by fights over methodology, and know of others that fared even worse. Not quite sure how precisely to prevent that from happening here.

- Combat takes time. And time is not something I have in abundance.

In general I maintain that having the fight is better than not having it, especially when issues like the direction of the Ph.D. program are at stake. I do not imagine that we will achieve a complete consensus about how the program should be arranged, but I do think that the possibility exists for a rough consensus about what we do and what we don't really do. I think that we need to prepare students to defend their methodological choices on valid philosophical grounds, and that we need to provide them with feasible options that both cover the range of available options in the discipline and are appropriate for their projects. But even doing that, it remains the case that our program leans in one direction rather than another, and we need to stop trying to force people into a mold where they and their projects don't quite fit…if we can achieve rough consensus about that, and take certain criticisms and attacks off the table as options for people to use in public fora, I'll feel that a victory has been won.

A storm seems to be coming. Hopefully it will bring changes…hopefully we'll all survive the experience, and even prosper from it.

[Posted with ecto]